Researchers Shaojie Shen and Chuhao Liu from the Hong Kong University of Science and Technology have unveiled a system that uses holographic augmented reality hardware to generate live 3D terrain maps, letting drone pilots simply point at targets visualised above any flat surface, reports virtualrealitytimes.com.

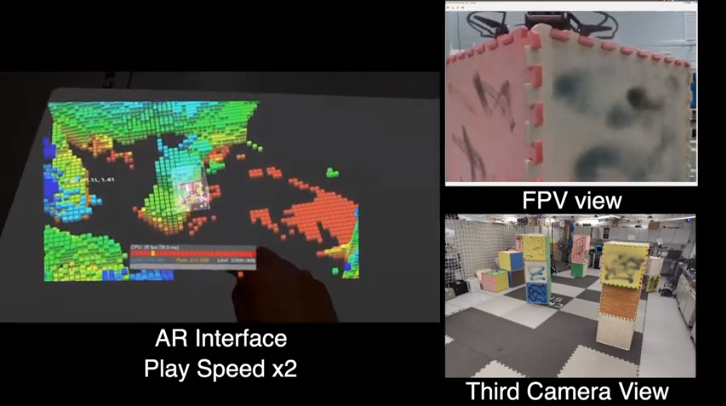

The holographic interface combines several technologies. For display, the Microsoft HoloLens headset renders the AR content as a colourful voxel map that can be viewed from any angle. The map is built from the autonomous drone's depth cameras, with raycasting used for real-time location data. The system gives the operator a live, highly spatial sense of the environment's elevation and depth, and it lets the operator see the drone from a third-person perspective and easily reposition it.
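The article does not say how the voxel map is built or coloured. As a rough illustration only, the sketch below quantises depth-camera points into a voxel grid and colours each voxel by height, one common way to produce a "colourful" elevation map. The function names, the 0.1 m voxel size, the z-up convention and the blue-to-red colour ramp are all hypothetical choices, not the researchers' implementation.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Point = Tuple[float, float, float]   # metres; z is assumed to point up
Voxel = Tuple[int, int, int]         # integer grid indices
RGB = Tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> Set[Voxel]:
    """Quantise 3D points into the set of occupied voxel indices."""
    occupied: Set[Voxel] = set()
    for x, y, z in points:
        occupied.add((math.floor(x / voxel_size),
                      math.floor(y / voxel_size),
                      math.floor(z / voxel_size)))
    return occupied


def height_colour(k: int, k_min: int, k_max: int) -> RGB:
    """Map a voxel's vertical index onto a blue (low) to red (high) ramp."""
    t = 0.0 if k_max == k_min else (k - k_min) / (k_max - k_min)
    return (round(255 * t), 0, round(255 * (1 - t)))


def coloured_map(points: Iterable[Point], voxel_size: float = 0.1) -> Dict[Voxel, RGB]:
    """Hypothetical helper: voxelise a point cloud and colour voxels by height."""
    occupied = voxelize(points, voxel_size)
    if not occupied:
        return {}
    heights = [v[2] for v in occupied]
    lo, hi = min(heights), max(heights)
    return {v: height_colour(v[2], lo, hi) for v in occupied}
```

For example, `coloured_map([(0.05, 0.05, 0.02), (0.07, 0.01, 0.04), (0.55, 0.55, 1.05)])` merges the first two points into one ground-level voxel (coloured blue) and places the third in a higher voxel (coloured red). Storing only occupied voxels keeps the map sparse, which matters when it has to be streamed to a headset.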

The HoloLens headset turns the wearer's hand gestures and gaze into point-and-click-style controls that select the drone's next target inside the holographic map, and it feeds these commands back to the drone. The autonomous drone then flies to the new location, updating the 3D map as it moves.
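One plausible way to turn a gaze into a target is to cast a ray from the headset along the gaze direction and take the first occupied voxel it hits. The article only mentions raycasting in general terms, so the sketch below is an assumption: a hypothetical `pick_voxel` helper using Amanatides-Woo style grid traversal over a map stored as a set of occupied integer voxel indices.

```python
import math
from typing import List, Optional, Set, Tuple

Voxel = Tuple[int, int, int]
Vec3 = Tuple[float, float, float]


def pick_voxel(origin: Vec3, direction: Vec3, occupied: Set[Voxel],
               voxel_size: float, max_dist: float) -> Optional[Voxel]:
    """Return the first occupied voxel along the ray, or None if nothing is hit.

    Steps from one voxel to the next by always crossing whichever voxel
    boundary (x, y or z) the ray reaches first.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    if norm == 0:
        return None
    d = [c / norm for c in direction]                   # unit gaze direction
    cell = [math.floor(o / voxel_size) for o in origin]  # voxel containing origin
    step: List[int] = []
    t_max: List[float] = []    # ray distance to the next boundary on each axis
    t_delta: List[float] = []  # ray distance needed to cross one whole voxel
    for i in range(3):
        if d[i] > 0:
            step.append(1)
            t_max.append(((cell[i] + 1) * voxel_size - origin[i]) / d[i])
            t_delta.append(voxel_size / d[i])
        elif d[i] < 0:
            step.append(-1)
            t_max.append((cell[i] * voxel_size - origin[i]) / d[i])
            t_delta.append(-voxel_size / d[i])
        else:
            step.append(0)
            t_max.append(math.inf)
            t_delta.append(math.inf)

    t = 0.0
    while t <= max_dist:
        if tuple(cell) in occupied:
            return (cell[0], cell[1], cell[2])
        axis = min(range(3), key=lambda i: t_max[i])  # nearest boundary crossing
        t = t_max[axis]
        cell[axis] += step[axis]
        t_max[axis] += t_delta[axis]
    return None
```

Walking the grid cell by cell, rather than sampling points at fixed intervals, guarantees that a thin surface one voxel thick cannot be skipped. The selected voxel's centre could then serve as the drone's next waypoint.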

The researchers have released a demonstration video that looks like something straight out of a sci-fi film, particularly the holographic aspect. However, because of bandwidth limitations, the drone relays only 3D map data to the Augmented Reality interface, not the accompanying first-person video.

The research team still has some way to go before the holographic drone control system is deployed. The drone's data was initially shared over Wi-Fi within an indoor testing space; for outdoor applications, low-latency 5G cellular connections could be used once 5G networks have advanced beyond their current drone-limited phase.

The researchers noted regular complaints from the first group of testers about the “very limited field of (AR) view”, an issue the upcoming HoloLens 2 headset, with its wider field of view, is expected to address. The testers also needed practice to become proficient at 3D targeting despite already being familiar with AR hardware, which may point to an imperfect 3D UI or gesture recognition.

The 3D map data is, however, considerably more bandwidth-efficient than live first-person video: it needs just 272MB of data when updating 10 times per second, whereas transmitting first-person video imagery at 30 frames per second requires 1.39GB. In the future, the team also aims to combine both stream types for the benefit of end users, optimising the data to fit the network's minimum bandwidth levels.
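The two figures can be compared directly. The article does not state the time period they cover, so the sketch below assumes both describe the same period and that GB and MB are decimal units (1GB = 1,000MB); the helper name is hypothetical.

```python
MAP_MB = 272.0      # 3D map data, updating 10 times per second
VIDEO_MB = 1390.0   # 1.39GB of first-person video, assuming 1GB = 1,000MB
MAP_RATE_HZ = 10
VIDEO_FPS = 30


def compare_streams(map_mb: float, video_mb: float,
                    map_hz: float, video_fps: float) -> tuple:
    """Return (total video/map data ratio, per-update map/frame size ratio).

    The per-update ratio assumes both totals cover the same time span.
    """
    total_ratio = video_mb / map_mb
    per_update_ratio = (map_mb / map_hz) / (video_mb / video_fps)
    return total_ratio, per_update_ratio


total, per_update = compare_streams(MAP_MB, VIDEO_MB, MAP_RATE_HZ, VIDEO_FPS)
print(f"video needs {total:.2f}x the data of the map stream")
print(f"each map update is {per_update:.2f}x the size of one video frame")
```

Under these assumptions the video stream needs roughly five times as much data as the map stream. Individual map updates are not tiny (each is a bit over half the size of one video frame), so much of the saving comes from sending updates three times less often.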

Despite these teething problems, the holographic Augmented Reality system shows much potential. Apart from its novel visual interface, it lets you control a remote drone with a simple, portable, standalone Augmented Reality headset instead of relying on a conventional setup of a full computer, monitor and joystick.

The researchers plan to present this “first step” in merging AR with autonomous drones at the International Conference on Intelligent Robots and Systems (IROS), scheduled for October 25th to 29th, 2020.
